- Bentley-MicroStation CAD

Other Activities

- European Airlink Association

- Agenda: Founding of the EAA

- Renewable Energy (Hungary)

- HPSU (.doc version)

- HPSU (HTML version)

- Reduction of CO2 (PDF)

- Generating Answers

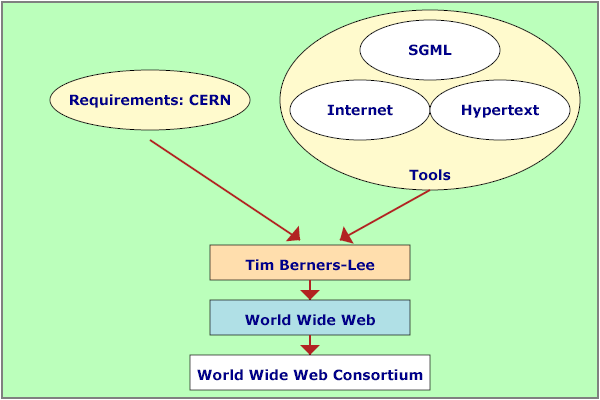

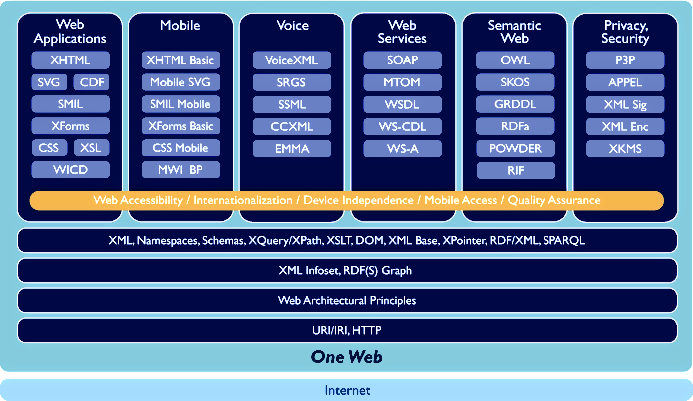

- XML Technology

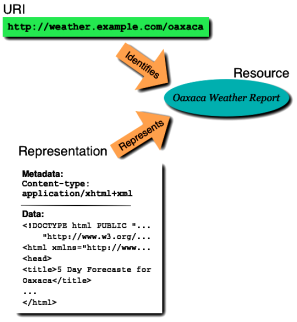

- Metadata and RDF

- Ontology

- Semantic Web Technologies

- Semantic Web-Based Services

- Knowledge Management

- References
